How does the database Index work internally?

Find the answers to - How do database indexes actually work internally? and why your queries are slow even after defining indexes.

Databases have always fascinated me. Since I don't understand how they work so it appears magical. You put a lot of data in a table-looking format and it just works. I can write dumb queries and the database will optimise it on its own. This is the closest thing to downloading more RAM as it upgrades the experience of the web app.

I explored relational databases to uncover this magic and to understand how they work behind the scenes.

Indexes - Secret Sauce

When you ask for data (SQL query), the system first asks the Index (if it can) for the location. The index is like a librarian.

Indexes use their own disk space. The whole objective of the index is to reduce entropy. We generate data in random order over time. And index tries to remove the randomness from it.

We write to preserve data and read to gain knowledge - Database Worker

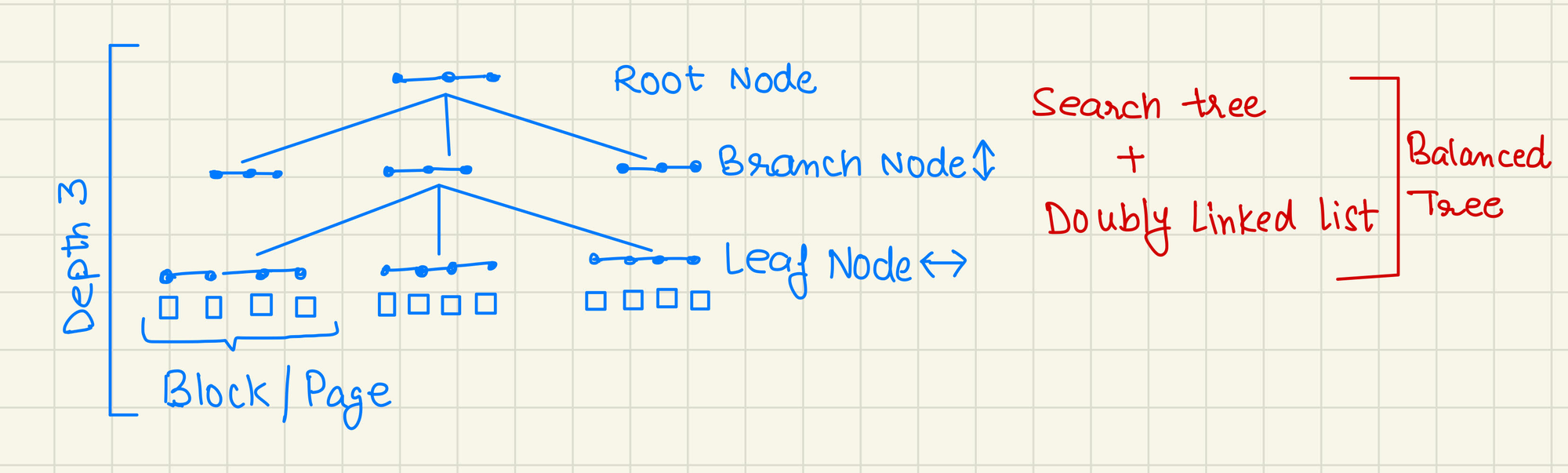

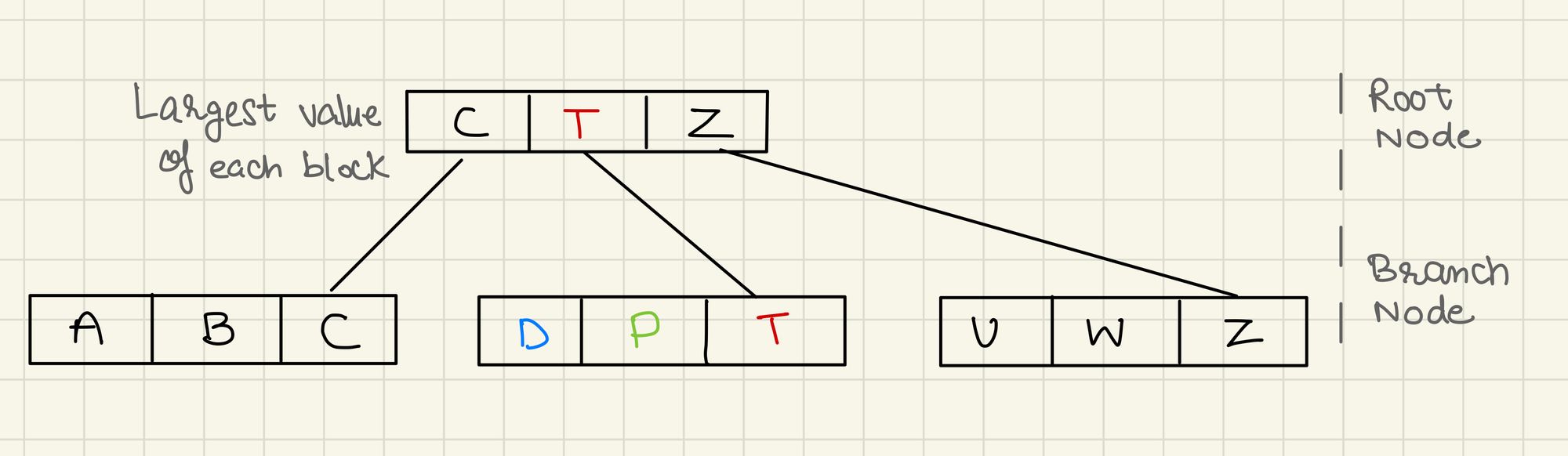

Most databases use B+ tree to store the index. It is built using a mixture of two data structures. Search Tree & Linked List.

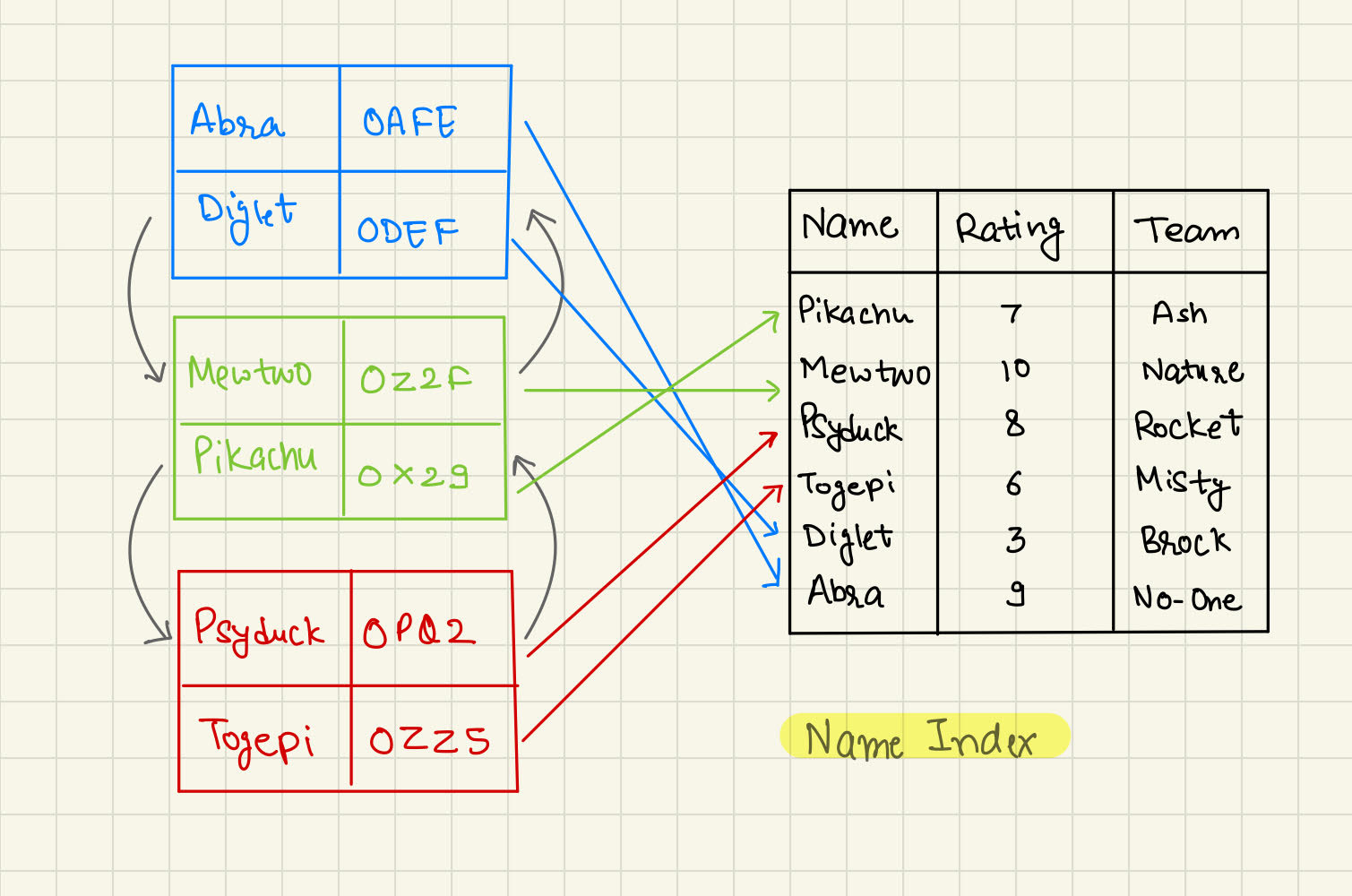

Leaf Node

It's the deepest layer of the B+ Tree. It stores the data along with the memory pointer of the row.

Some observations

- The leaf node is deepest in the B+ Tree and is the only layer where memory pointers are saved. The weird number

0ZZ5are memory pointers. - Page/Block - Smallest unit of data storage in the database. In the above example, we can store two entries in every block.

- Leaf Node is built using a doubly linked list, pointers from the blue block can jump to the green block and vice-versa.

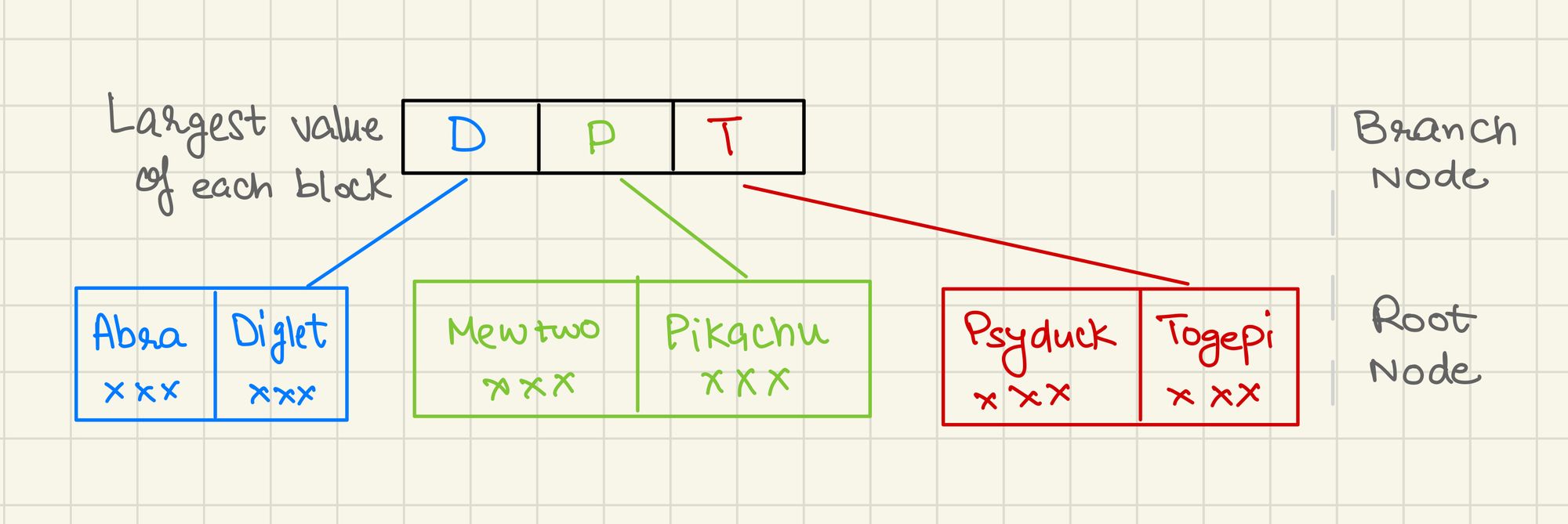

Branch Node

This node is present above Leaf Node

Some observations

- It does not store any memory pointers

- Each block contains the largest value of the next layer (like from Psyduck and Togepi, Togepi will be stored in Branch Node)

- You can not jump from one branch node to another as it's not a doubly linked list

Root Node

This is the entry point of our index

Some observations

- Similar to Branch Node, Root Node also does not store memory pointers

- It's the first layer from where the search starts

Combining All

Step 1 - Root Node + Branch Node = Binary tree-like traversal, this is the lookup part

Step 2 - Leaf Node = Doubly linked list with the memory pointer, this is to actually retrieve the row from the disk

Why my queries are slow if indexes are defined?

There would be situations where even after defining the index the query is still slow. Some of the common reasons are

Low Cardinality

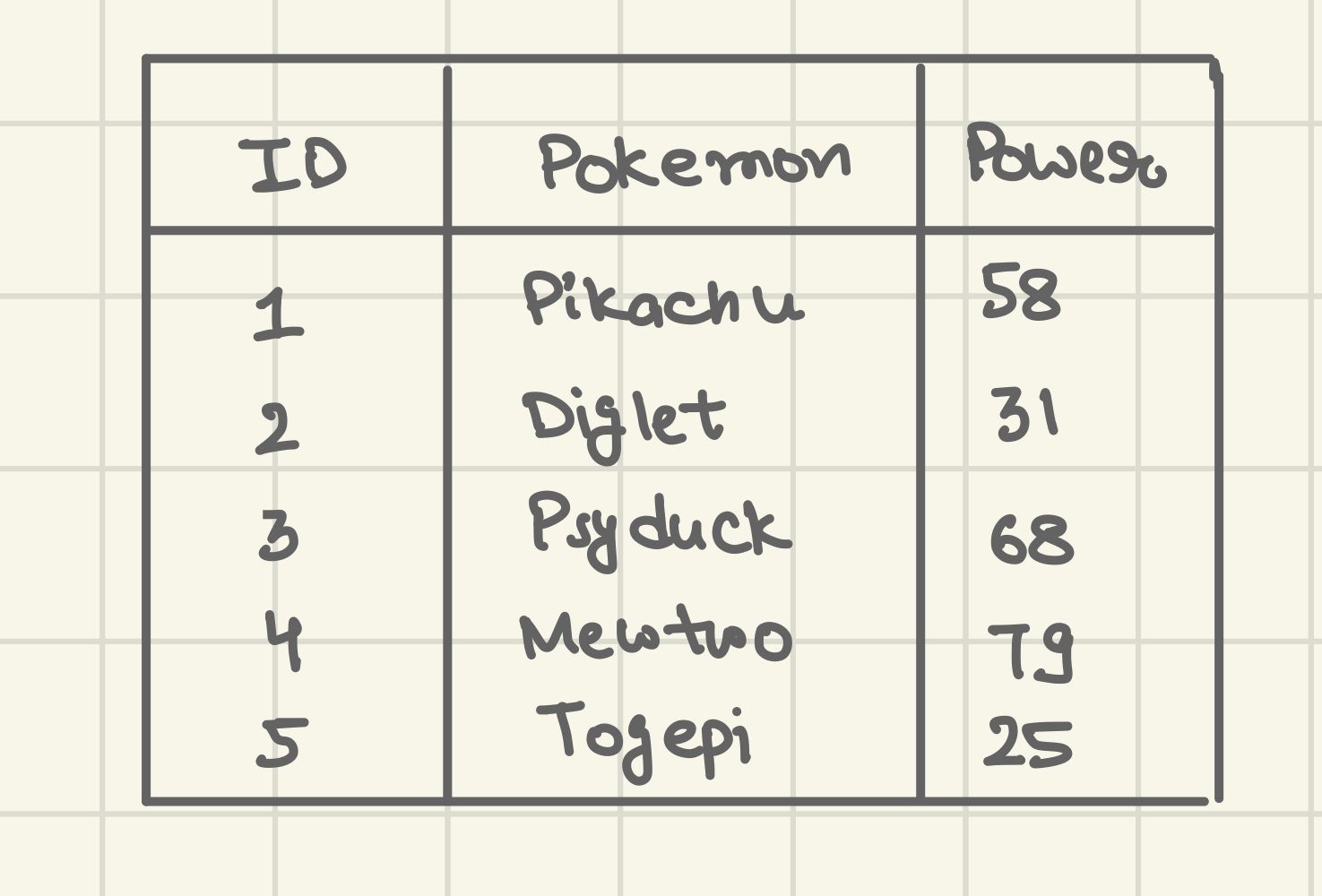

It's simply the uniqueness of the column. If the data is not unique then the b+ tree has low depth. For instance, consider a situation where you define an index on sex attributes. The index won't be of much use here, because in any case, you are dividing data into just three (maybe two!) buckets (Male, Female, Others). Or you have a field called delete status that will have only two values (true, false).

In such cases, it's better to define a composite index (index on several columns). Pick another column that has better cardinality and define a composite index on both columns instead of one column with low cardinality.

Vacuum

To achieve speed, databases and most data-intensive applications use some quick hacks. And this is the by-product of those hacks.

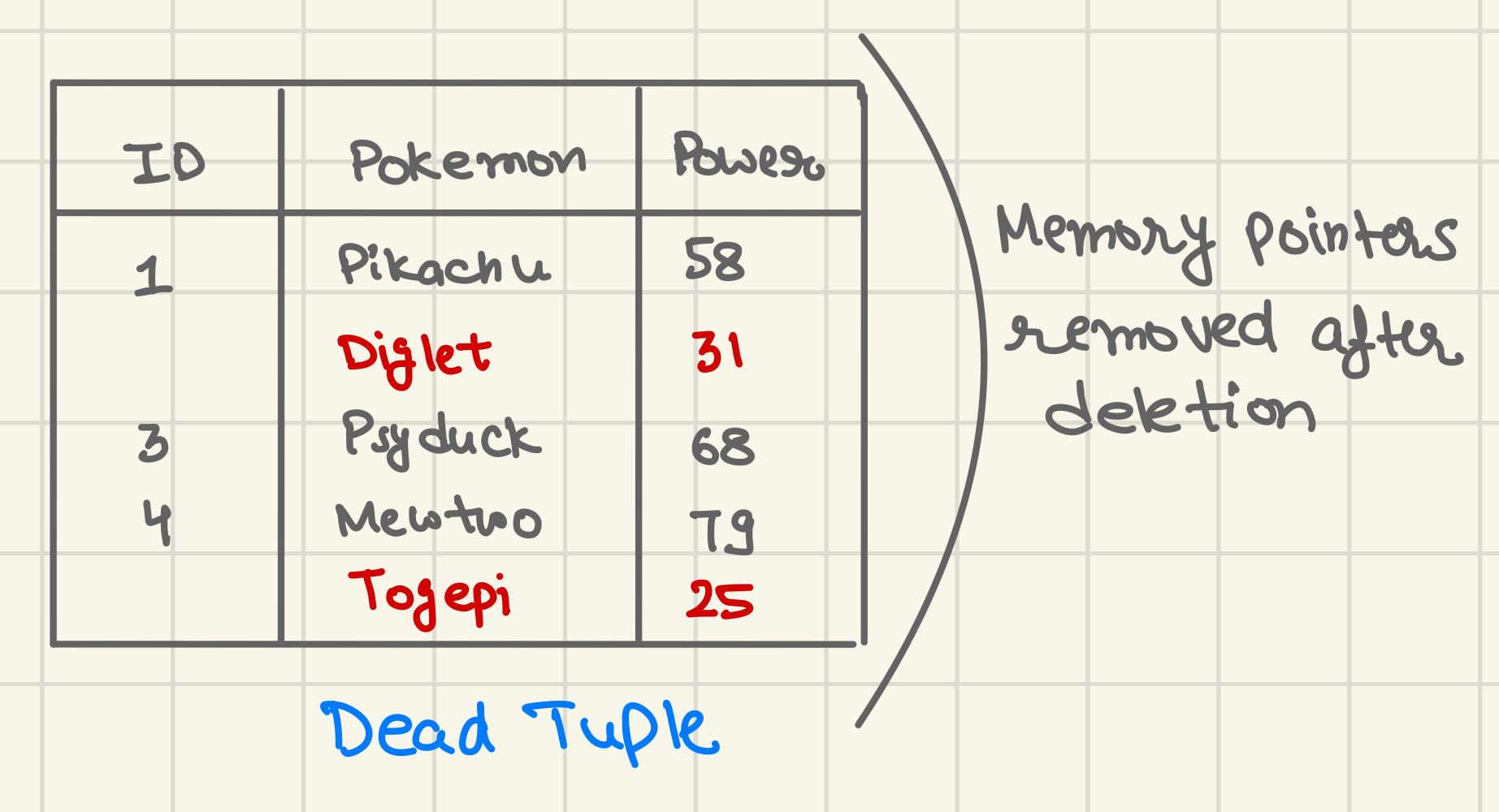

Whenever you fire Delete query, the database does not actually delete the content. It just removes the memory pointer to that location, but the content is still present on the file, just not referenced by anyone (called dead tuples), as removing the memory pointer is speedier and economical as compared to deleting data.

Similarly, when you send Update a command, the database adds a new row and updates the memory pointer to the newly written row.

Consider a situation where you send a query Update employee_salary set bonus = 50,000 after the company's IPO. Now, if the initial size of the table size was 1 GB, after this query it won't remain 1 GB (most likely 2 GB).

Over time database size will increase, which is not a major problem. But the database will also be fragmented, which means the database has to jump many page blocks to get the data. This will make the query slow.

To solve this problem, the database does Vacuuming periodically (autovacuum). It is done using two ways

- Vacuum - This process reclaims the dead tuple memory. After this process, the database size will remain the same. But if you add new data it will use the freed-up space of dead tuples first.

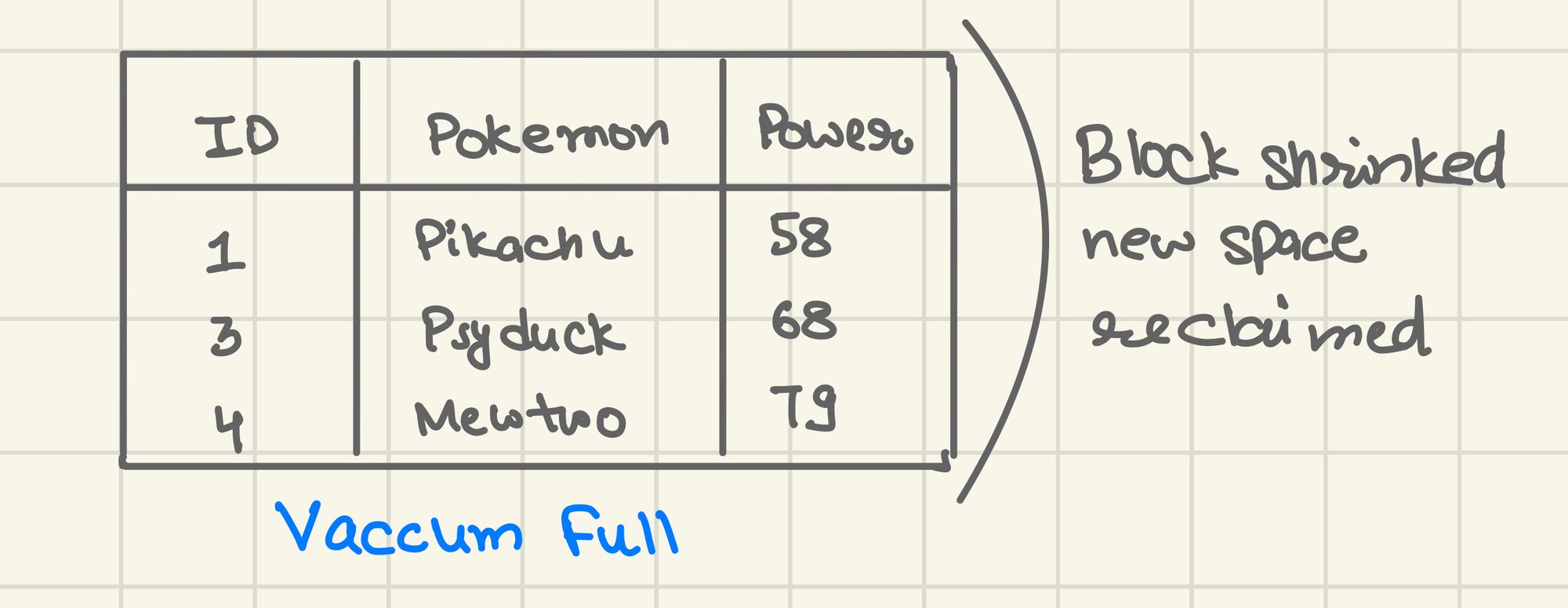

- Vacuum Full - This process reclaims the dead tuple memory like #1 but also defrags the space, which means the database size will shrink after this process. The only downside, this process locks down the whole table while it's running.

Let's visualise these concepts.

Once you delete Delete from Pokedex where Power < 50 the memory pointers are removed but the data is still in the same location. This is what we call dead tuple, same happen in case of an update.

Once you run Vaccum, the data is washed off, so that new data can take its place. So the block size remains the same after the Vaccum process.

But if you fire Vaccum Full command, then the block will be resized to free up the extra space. This is why this operation is slow and locks the whole table.

In case, you are running Vaccum Full to regain some space, this trick won't work as Vaccum Full first creates a full copy of the table before running any cleanup.

Row Access

The objective of the index is to find the needle in the haystack. But retrieving that needle is a slow process if it's not part of the index.

For instance, say you have a composite index defined on name and location and your query is like Select name, location from earth where location = area-51 This will give you quick results as all the data required is in the index.

But if you say Select name, location, age from earth where location = area-51 the result will be slow, as age is not available in the index, so the database will now go to the disk and retrieve the data for age. Disk lookup is mostly the slowest part of this whole process. Doing select * is the worst thing you can do to kill the performance. Always mention the fields that are required for operation.

Optimizer

The query optimiser is the second secret sauce of databases after the index. It's the logic that decides as what's the best path to retrieve the data. Say you are using 5 tables in the join, in which order should SQL pull data from each table and then join so as to reduce the processing time is the job of the query optimiser.

There are situations where even though you have an index defined on field in where clause but database may go for a full table scan as the optimizer says it's the best way. Most of the time the optimizer is correct, but there are situations where it may not guess the correct path. Like the database is not vacuumed or the ORM is not letting the database run optimiser. In such cases, you can analyze the query by appending EXPLAIN ANALYZE to see what's going on under the hood.

Tip - How to find areas where optimisation is required?

- Log the slow queries in your database, this setting is available in most of the RDBMS by default

- Backtrace the origin of the slow query and debug as to why it is slow